The Galactic centre is one of the most dynamic places in our Galaxy. It is thought to be home to a supermassive black hole, called Sagittarius A*.

January 12th 2008:

Antimatter Cloud Traced To Binary Stars. Four years of observations from the European Space Agency’s Integral (INTErnational Gamma-Ray Astrophysics Laboratory) satellite may have cleared up one of the most vexing mysteries in our Milky Way: the origin of a giant cloud of antimatter surrounding the galactic center. What? I look at NASA, 01.09.08.

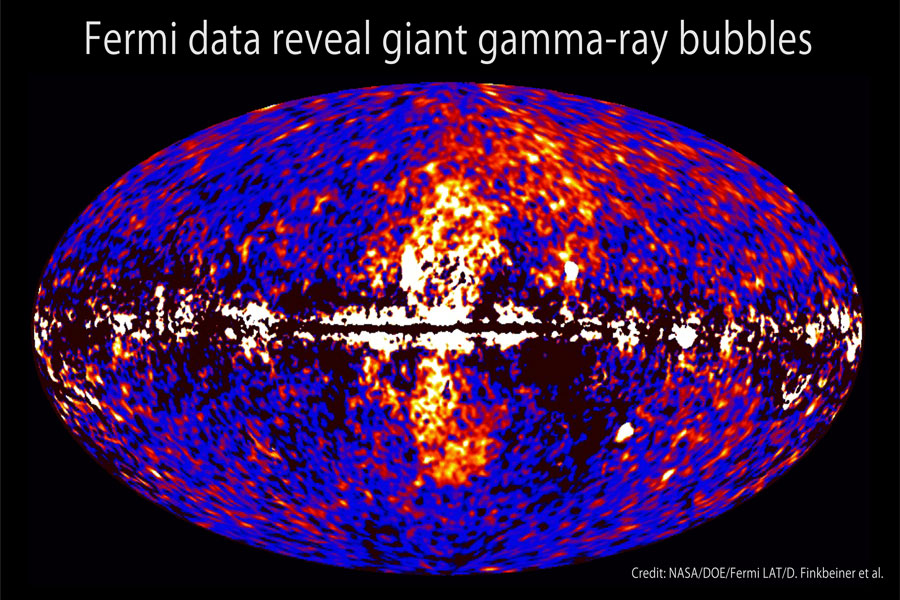

Integral mapped the glow of 511 keV gamma rays from electron-positron annihilation. The map shows the whole sky, with the galactic center in the middle. The emission extends to the right.

Look at the picture of M87 in the earlier post; both show the same kind of nucleus. The cloud shows up because of the gamma rays it emits when individual particles of antimatter, in this case positrons, encounter electrons, their normal matter counterpart, and annihilate one another.

Integral found that the cloud extends farther on the western side of the galactic center than it does on the eastern side. This imbalance matches the distribution of a population of binary star systems that contain black holes or neutron stars, strongly suggesting that these binaries are churning out at least half of the antimatter, and perhaps all of it.

The key detection is an asymmetry: the cloud is lopsided, with twice as much on one side of the galactic centre as on the other. Such a distribution is highly unusual because gas in the inner region of the galaxy is relatively evenly distributed.

The cloud itself is roughly 10,000 light-years across and generates the energy of about 10,000 Suns. It shines brightly in gamma rays due to a reaction governed by Einstein’s famous equation E=mc^2. Negatively charged subatomic particles known as electrons collide with their antimatter counterparts, positively charged positrons. When electrons and positrons meet, they can annihilate one another and convert all of their mass into gamma rays with energies of 511,000 electron-volts (511 keV). Scientists don’t understand how low-mass X-ray binaries could produce enough positrons to explain the cloud, and they also don’t know how the positrons escape from these systems. "We expected something unexpected, but we did not expect this," says Skinner. The antimatter is probably produced in a region near the neutron stars and black holes, where powerful magnetic fields launch jets of particles that rip through space at near-light speed.
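The two numbers in the paragraph above can be cross-checked with E=mc^2. The Python sketch below computes the electron rest energy, which should come out at 511 keV, and then estimates how many annihilations per second the quoted "energy of about 10,000 Suns" would imply. It assumes that figure is taken at face value and is radiated entirely as pairs of 511 keV photons (it ignores the three-photon positronium continuum), and it uses the nominal solar luminosity; the result is an order-of-magnitude illustration, not a published rate.

```python
# Rough check of the 511 keV line and the implied annihilation rate.
# Assumption: the "10,000 Suns" figure is taken at face value and all of it
# is emitted as two 511 keV photons per electron-positron annihilation.

ELECTRON_MASS_KG = 9.1093837015e-31
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_EV = 1.602176634e-19
SOLAR_LUMINOSITY_W = 3.828e26  # nominal solar luminosity


def rest_energy_kev(mass_kg: float) -> float:
    """E = m c^2, converted from joules to keV."""
    return mass_kg * SPEED_OF_LIGHT_M_S**2 / JOULES_PER_EV / 1e3


def annihilation_rate_per_s(luminosity_w: float) -> float:
    """Annihilations per second if each e+e- pair releases 2 * m_e c^2."""
    energy_per_pair_j = 2 * ELECTRON_MASS_KG * SPEED_OF_LIGHT_M_S**2
    return luminosity_w / energy_per_pair_j


line_kev = rest_energy_kev(ELECTRON_MASS_KG)
rate = annihilation_rate_per_s(10_000 * SOLAR_LUMINOSITY_W)

print(f"electron rest energy: {line_kev:.1f} keV")
print(f"implied annihilation rate: {rate:.1e} per second")
```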

The antimatter cloud was discovered in the 1970s. What? Why, then, do they not talk about it, but say there is no dark matter? Incredible. Scientists have proposed a wide range of explanations for the origin of the antimatter, which is exceedingly rare in the cosmos. For years, many theories centered on radioactive elements produced in supernovae, prodigious stellar explosions. Others suggested that the positrons come from neutron stars, novae, or colliding stellar winds. Still others proposed that particles of dark matter were annihilating one another, or with atomic matter, producing electrons and positrons that annihilate into 511-keV gamma rays. But other scientists remained skeptical, noting that the dark matter particles would have to be significantly lighter than most theories predicted.

Well, we have just seen that the Higgs is probably much lighter than expected. If it even exists at all? Integral found that certain types of binary systems near the galactic center are also skewed to the west. These systems are known as hard low-mass X-ray binaries, since they light up in high-energy (hard) X-rays as gas from a low-mass star spirals into a companion black hole or neutron star. The fact that the two "pictures" of antimatter and hard low-mass X-ray binaries line up strongly suggests that the binaries are producing significant amounts of positrons. NASA’s Gamma-ray Large Area Space Telescope (GLAST), scheduled to launch in 2008, may help clarify how objects such as black holes launch particle jets. That is the paper we have just seen.

The unexpectedly lopsided shape is a new clue to the origin of the antimatter. The observations have significantly decreased the chances that the antimatter is coming from the annihilation or decay of astronomical dark matter. Some astronomers have suggested that exploding stars could produce the positrons. This is because radioactive nuclear elements are formed in the giant outbursts of energy, and some of these decay by releasing positrons. However, it is unclear whether these positrons can escape from the stellar debris in sufficient quantity to explain the size of the observed cloud.
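Whether positrons escape the debris depends partly on timing: an emitter that decays within months releases its positrons while the debris is still dense, whereas a long-lived one decays after the debris has thinned out. The Python sketch below applies the exponential decay law N(t) = N0 * 2^(-t/T_half). The three isotopes and their approximate half-lives are illustrative assumptions (commonly cited positron emitters from stellar explosions), not values taken from the studies discussed here.

```python
# Exponential decay: fraction of a radioactive isotope left after time t.
# Isotopes and approximate half-lives are illustrative assumptions.

DAYS_PER_YEAR = 365.25

HALF_LIVES_YEARS = {
    "Co-56": 77.2 / DAYS_PER_YEAR,  # about 77 days
    "Ti-44": 59.0,                  # about 60 years
    "Al-26": 7.2e5,                 # about 0.7 million years
}


def fraction_remaining(t_years: float, half_life_years: float) -> float:
    """N(t) / N0 = 2 ** (-t / T_half)."""
    return 2.0 ** (-t_years / half_life_years)


for isotope, half_life in HALF_LIVES_YEARS.items():
    left = fraction_remaining(10.0, half_life)
    print(f"{isotope}: {left:.3g} of the original amount remains after 10 years")
```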

Not from dark matter?

Dark matter is thought to exist throughout the Universe – undetectable matter that differs from the normal material that makes up stars, planets and us. It is also believed to be present within and around the Milky Way, in the form of a halo.

The recent study has found that the ‘positrons’ fuelling the radiation are not produced from dark matter but from an entirely different, and much less mysterious, source: massive stars explode and leave behind radioactive elements that decay into lighter particles, including positrons.

The reasoning behind the original hypothesis was that positrons, being electrically charged, would be affected by magnetic fields and thus would not be able to travel far. As the radiation was observed in places that did not match the known distribution of stars, dark matter was invoked as an alternative for the origin of the positrons.

But the recent finding by a team of astronomers led by Richard Lingenfelter at the University of California at San Diego proves otherwise. The astronomers show that the positrons formed by radioactive decay of elements left behind after explosions of massive stars are, in fact, able to travel great distances, with many leaving the thin Galactic disc.
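The original argument rests on how tightly a charged particle is bound to a magnetic field. A quick way to see the scale is the gyroradius r = p/(qB). The Python sketch below assumes a positron with 1 MeV of kinetic energy in a 5 microgauss interstellar field; both inputs are illustrative assumptions, not values from the study. The radius comes out at thousands of kilometres, tiny compared with a 10,000 light-year cloud, so positrons are effectively tied to field lines, and how far they get depends on how they are transported along those lines rather than on the gyroradius alone.

```python
import math

# Gyroradius of a positron in a uniform magnetic field: r = p / (|q| B).
# Assumed, illustrative inputs: 1 MeV kinetic energy, 5 microgauss field.

ELECTRON_REST_MEV = 0.51099895
SPEED_OF_LIGHT_M_S = 299_792_458.0
METERS_PER_LIGHT_YEAR = 9.4607e15
TESLA_PER_GAUSS = 1e-4


def gyroradius_m(kinetic_mev: float, b_tesla: float) -> float:
    """Relativistic gyroradius for a particle of charge e moving perpendicular to B."""
    total_mev = kinetic_mev + ELECTRON_REST_MEV
    pc_mev = math.sqrt(total_mev**2 - ELECTRON_REST_MEV**2)  # momentum times c
    # p / (e B) with p = (pc in eV) * e / c: the charge e cancels.
    return pc_mev * 1e6 / (SPEED_OF_LIGHT_M_S * b_tesla)


r = gyroradius_m(1.0, 5e-6 * TESLA_PER_GAUSS)
print(f"gyroradius: {r / 1e3:.0f} km = {r / METERS_PER_LIGHT_YEAR:.1e} light-years")
```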

We can convert pure energy into equal amounts of matter and antimatter. Matter and antimatter can also convert back to energy. In a universe with equal amounts of matter and antimatter, we’d expect most of it to annihilate. We know that particles called neutral B mesons can fluctuate from matter to antimatter and back again, like a seesaw going up and down.
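The seesaw can be made quantitative. For a neutral B meson created as matter, the probability of finding it as antimatter at time t, given it has not yet decayed, is (1 - cos(Δm·t))/2, where Δm is the tiny mass difference between the two mass states. Weighting that by the exponential decay-time distribution gives the fraction of mesons that decay as antimatter, χ = x^2 / (2(1 + x^2)) with x = Δm·τ. The Python sketch below checks that closed form numerically. The values of Δm and τ are approximate B_d meson values assumed for illustration, and the sketch ignores CP violation and any lifetime difference, which are exactly the small effects the Tevatron experiments are after.

```python
import math

# Approximate values for the neutral B_d meson (assumed for illustration).
DELTA_M = 0.5065  # mass difference, in ps^-1 (units with hbar = 1)
TAU = 1.519       # mean lifetime, in ps


def flip_probability(t: float) -> float:
    """Probability that a meson produced as B0 is seen as anti-B0 at time t (ps),
    given that it has not yet decayed. Ignores CP violation in mixing."""
    return 0.5 * (1.0 - math.cos(DELTA_M * t))


def integrated_mixing(steps: int = 200_000, t_max: float = 50.0) -> float:
    """Fraction of all decays that happen as anti-B0: integrate the decay-time
    distribution exp(-t/tau)/tau weighted by flip_probability (midpoint rule)."""
    dt = t_max / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt
        total += math.exp(-t / TAU) / TAU * flip_probability(t) * dt
    return total


x = DELTA_M * TAU
closed_form = x**2 / (2 * (1 + x**2))

print(f"x = {x:.3f}")
print(f"numerical chi   = {integrated_mixing():.4f}")
print(f"closed-form chi = {closed_form:.4f}")
```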

New physics?

ScienceDaily, Aug 2010: The Tevatron experiment DZero found that proton-antiproton collisions in the experiment produced short-lived B meson particles that almost immediately broke down into debris that included slightly more matter than antimatter. The two types of matter annihilate each other, so most of the material coming from these sorts of decays would disappear, leaving an excess of regular matter behind. But the truly exciting implication is that the experiment points to new physics, beyond the widely accepted Standard Model, at work. If that's the case, major scientific developments lie ahead.

The explanation for the dominance of matter in the present-day universe is that CP violation treated matter and antimatter differently. The new result provides evidence of deviations from the present theory in the decays of B mesons, in agreement with earlier hints: the probability that the measurement is consistent with any known effect is below 0.1 percent (3.2 standard deviations). When matter and antimatter particles collide in high-energy collisions, they turn into energy and produce new particles and antiparticles. At the Fermilab proton-antiproton collider, scientists observe hundreds of millions of such collisions every day.
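Converting "standard deviations" into a probability is a one-line calculation with the Gaussian tail. The Python sketch below does it for 3.2 standard deviations. Which convention the quoted 0.1 percent uses is an assumption on my part: the one-sided tail (about 0.07 percent) is below 0.1 percent, while the two-sided tail (about 0.14 percent) is not, so the quoted figure matches the one-sided convention.

```python
import math


def one_sided_p(z: float) -> float:
    """Probability that a Gaussian fluctuation exceeds z standard deviations in one direction."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def two_sided_p(z: float) -> float:
    """Probability of a fluctuation of at least z standard deviations in either direction."""
    return math.erfc(z / math.sqrt(2.0))


z = 3.2
print(f"one-sided: {100 * one_sided_p(z):.3f} %")
print(f"two-sided: {100 * two_sided_p(z):.3f} %")
```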

Both CDF (top quark) and DZero (bottom quark) therefore continue to collect data.

Borissov, from Lancaster University in England, was recognized by the UK society for his work on B physics.

He was also part of a team whose work received media attention, including a front-page article in the New York Times, in May 2010, when DZero found evidence of a bias toward matter over antimatter. This observation, called the dimuon charge asymmetry, could help to explain why the universe as we know it is composed of matter. “The Standard Model is not able to explain why we have the world around us.”
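The dimuon charge asymmetry is, at heart, a counting ratio. Assuming the sign convention A = (N++ - N--)/(N++ + N--), where N++ and N-- count events with two positive or two negative muons, the Python sketch below computes A and its binomial statistical uncertainty. The event counts are hypothetical, chosen only to show the arithmetic; they are not DZero's data, and the real analysis also applies background corrections that this sketch omits.

```python
import math


def charge_asymmetry(n_plus_plus: int, n_minus_minus: int) -> tuple[float, float]:
    """Return (A, sigma_A) for A = (N++ - N--) / (N++ + N--).

    sigma_A is the binomial statistical error sqrt((1 - A^2) / N).
    """
    n_total = n_plus_plus + n_minus_minus
    a = (n_plus_plus - n_minus_minus) / n_total
    sigma = math.sqrt((1.0 - a * a) / n_total)
    return a, sigma


# Hypothetical counts, for illustration only.
a, sigma = charge_asymmetry(495_000, 505_000)
print(f"A = {a:+.4f} +/- {sigma:.4f}  ({abs(a) / sigma:.1f} standard deviations from zero)")
```

Under this convention a negative A means an excess of negative-muon pairs. The statistical error shrinks roughly as 1/sqrt(N), which is one reason collecting more data matters.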

But there is also a parity asymmetry?

Dark matter and antimatter relations.

“Here I will explain the difference between matter, antimatter, dark matter, and negative matter in a concise and understandable way,” says Hontas Farmer. Dark matter only interacts by way of gravity and the weak force. It does not interact via the electromagnetic force or the strong force, hence dark matter cannot be seen. (The weak force is the one responsible for radioactive beta decay; it is the strong force that keeps neutrons and protons together inside the nucleus of atoms.) This is why experiments to detect dark matter directly rely on a particle of dark matter bumping into a particle of matter.

But would that not be difficult without some strong force in the surroundings, or if all the forces vanish?

Antimatter as dark matter?

It is conceivable that the dark matter (or at least part of it) could be antimatter, but there are very strong experimental reasons to doubt this. For example, if the dark matter out there were antimatter, we would expect it to annihilate with matter whenever it meets up with it, releasing bursts of energy primarily in the form of light. We see no evidence in careful observations for that, which leads most scientists to believe that whatever the dark matter is, it is not antimatter.
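To get a feel for how bright such bursts would be: when antimatter meets an equal mass of matter, E=mc^2 applies to the combined mass. The Python sketch below computes the energy released when one gram of antimatter annihilates with one gram of matter, expressed in kilotons of TNT using the conventional 4.184 × 10^12 J per kiloton.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_KILOTON_TNT = 4.184e12  # conventional definition


def annihilation_energy_j(antimatter_kg: float) -> float:
    """Energy when antimatter annihilates with an equal mass of matter: E = (2m) c^2."""
    return 2.0 * antimatter_kg * SPEED_OF_LIGHT_M_S**2


energy = annihilation_energy_j(1e-3)  # one gram of antimatter
print(f"{energy:.2e} J, about {energy / JOULES_PER_KILOTON_TNT:.0f} kilotons of TNT")
```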

"It would take longer than the age of the universe to make one gram of antimatter."

Dark matter is not necessarily antimatter. Dark matter is matter that for some reason doesn't emit or reflect light. It could be purely transparent, never interacting with light. Is invisibility a change of the Planck constant? It is also said that antimatter goes backward in time. What would that mean? That we cannot see the past?

"The largest part of dark matter, which does not interact with electromagnetic radiation, is not only 'dark' but also by definition utterly transparent; in recognition of this, it has been referred to as transparent matter by some astronomers." Water is also transparent.

Could antimatter be anyons? Anyons are seen in superconductivity and condensed matter physics. "Some exotic particle-like structures known as anyons appear to entwine in ways that could lead to robust quantum computing schemes," according to Kirill Shtengel. The anyons the researchers believe they have created are not true particles that can exist on their own, like electrons or protons. Instead, anyons are quasiparticles that exist only inside a material, but move in ways that resemble free particles. Although braids in three dimensions unravel easily, braids trapped in two dimensions can't pull apart, which means they're able to withstand disturbances that would scramble the data and calculations in other quantum computers.

Wilczek, in "Quantum Computers and Anyons" on his Edge bio page:

I am very fond of anyons because I worked at the beginning on the fundamental physics involved. It was thought, until the late 70s and early 80s, that all fundamental particles, or all quantum mechanical objects that you could regard as discrete entities, fell into two classes: so-called bosons, after the Indian physicist Bose, and fermions, after Enrico Fermi. Bosons are particles such that if you take one around another, the quantum mechanical wave function doesn't change. Fermions are particles such that if you take one around another, the quantum mechanical wave function is multiplied by a minus sign. It was thought for a long time that those were the only consistent possibilities for behavior of quantum mechanical entities. In the late 70s and early 80s, we realized that in two plus one dimensions, not in our everyday three dimensional space (plus one dimension for time), but in planar systems, there are other possibilities. In such systems, if you take one particle around another, you might get not a factor of one or minus one, but multiplication by a complex number; there are more general possibilities. More recently, the idea that when you move one particle around another, it's possible not only that the wave function gets multiplied by a number, but that it actually gets distorted and moves around in a bigger space, has generated a lot of excitement. Then you have this fantastic mapping from motion in real space as you wind things around each other, to motion of the wave function in Hilbert space, in quantum mechanical space. It's that ability to navigate your way through Hilbert space that connects to quantum computing and gives you access to a gigantic space with potentially huge bandwidth that you can play around with in highly parallel ways, if you're clever about the things you do in real space.

But in anyons we're really at the primitive stage. There's very little doubt that the theory is correct, but the experiments are at a fairly primitive stage—they're just breaking now.
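Wilczek's distinction can be written down directly. For Abelian statistics, exchanging two identical particles multiplies the wave function by a phase e^(iθ): θ = 0 for bosons, θ = π for fermions, and, in two spatial dimensions only, any other value for anyons. For non-Abelian anyons the exchange instead acts as a matrix on a space of several states, so the order of exchanges matters. The Python sketch below uses, as an assumption, one common convention for the 2×2 braid matrices of four Ising-type anyons (the kind proposed for the filling factor 5/2 state in the Willett et al. reference; conventions differ between papers). It checks that the two exchanges do not commute but still satisfy the braid relation σ1σ2σ1 = σ2σ1σ2.

```python
import cmath
import math

Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def close(a: Matrix, b: Matrix, tol: float = 1e-12) -> bool:
    """Entry-wise comparison of two 2x2 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))


def exchange_phase(theta: float) -> complex:
    """Abelian statistics: an exchange multiplies the wave function by e^(i*theta)."""
    return cmath.exp(1j * theta)


boson = exchange_phase(0.0)          # +1
fermion = exchange_phase(math.pi)    # -1
anyon = exchange_phase(math.pi / 4)  # another phase, possible only in 2+1 dimensions

# Non-Abelian (Ising-type) anyons: four quasiparticles share a 2-dimensional
# space of states, and each exchange acts as a 2x2 unitary matrix.
# Assumed convention: sigma1 = e^(-i pi/8) diag(1, i),
#                     sigma2 = e^(i pi/8) / sqrt(2) [[1, -i], [-i, 1]].
p = cmath.exp(1j * math.pi / 8)
s = 1 / math.sqrt(2)
sigma1: Matrix = [[1 / p, 0], [0, 1j / p]]
sigma2: Matrix = [[p * s, -1j * p * s], [-1j * p * s, p * s]]

order_matters = not close(matmul(sigma1, sigma2), matmul(sigma2, sigma1))
braid_relation = close(matmul(matmul(sigma1, sigma2), sigma1),
                       matmul(matmul(sigma2, sigma1), sigma2))

print(f"boson {boson:.2f}, fermion {fermion:.2f}, anyon {anyon:.2f}")
print(f"order of exchanges matters: {order_matters}")
print(f"braid relation holds: {braid_relation}")
```

Because the matrices do not commute, the final state records the order in which the quasiparticles were wound around each other, which is the property braid-based quantum computing schemes hope to exploit.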

Back to the blackboard?

Reference:

Georg Weidenspointner et al. An asymmetric distribution of positrons in the galactic disk revealed by gamma rays. Nature, 10 January 2008.

R. E. Lingenfelter, J. C. Higdon, R. E. Rothschild. Is There a Dark Matter Signal in the Galactic Positron Annihilation Radiation? Physical Review Letters, 2009; 103 (3): 031301. DOI: 10.1103/PhysRevLett.103.031301

The D0 Collaboration: V. M. Abazov et al. Evidence for an anomalous like-sign dimuon charge asymmetry. Physical Review D, 2010. http://arxiv.org/abs/1005.2757

R. L. Willett, L. N. Pfeiffer, K. W. West. Alternation and interchange of e/4 and e/2 period interference oscillations consistent with filling factor 5/2 non-Abelian quasiparticles. Physical Review B, 2010; 82: 205301. DOI: 10.1103/PhysRevB.82.205301

Kirill Shtengel. Non-Abelian anyons: New particles for less than a billion? APS Physics, 2010; 3 (93). DOI: 10.1103/Physics.3.93

The Fermilab Tevatron experiments, in the APS journals Physical Review Letters and Physical Review D, Aug. 17, 2010, and May 14, 2010.

The DZero result is based on data collected over the last eight years by the DZero experiment at Fermilab. Besides Ellison, the UC Riverside co-authors of the paper, submitted for publication in Physical Review D, are Ann Heinson, Liang Li, Mark Padilla, and Stephen Wimpenny.

http://www-d0.fnal.gov/Run2Physics/WWW/results/final/B/B10A/

Cern, antimatter, Mirror of the universe, links.